About Me

I’m currently a 4th-year Ph.D. student in the Department of Computer Science at the University of California, Los Angeles (UCLA), where I am very fortunate to be advised by Prof. Wei Wang. Previously, I received my B.Sc. from the Department of Mathematics, UCLA, and my M.Sc. from the Department of Computer Science, UCLA. During that time, I was a student researcher in the UCLA-NLP group with Prof. Kai-Wei Chang.

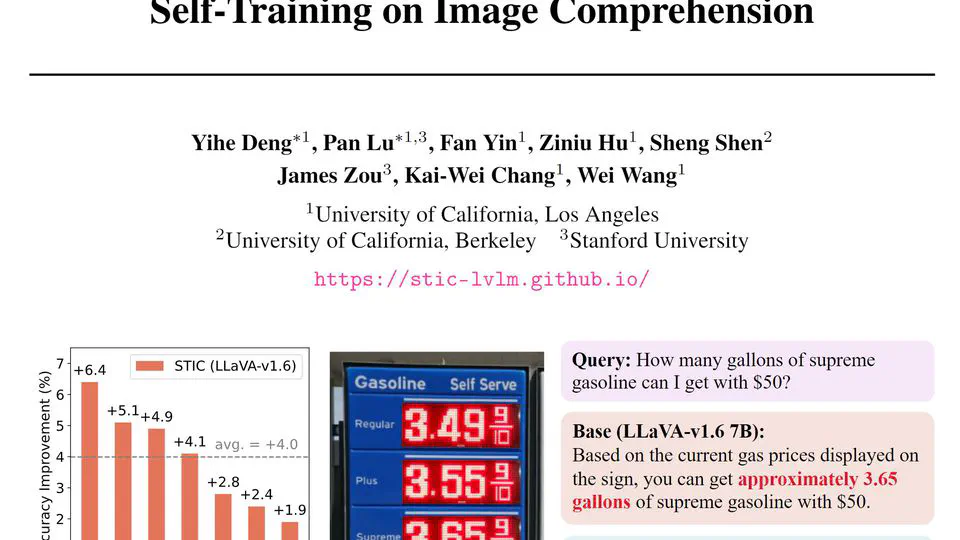

My research focuses on post-training for Large Language Models (LLMs), specifically alignment fine-tuning, self-training, and hallucination. I also work on robustness and multi-modal learning.

- Large Language Models (LLMs)

- LLM Post-training / Self-training

- Multi-modal Learning

- Ph.D. in Computer Science, University of California, Los Angeles

- M.S. in Computer Science, University of California, Los Angeles

- B.S. in Mathematics of Computation, University of California, Los Angeles

I’m currently working on LLM post-training, with a focus on self-training methods for both text-only and multi-modal LLMs. More broadly, I’m interested in alignment and hallucination reduction for LLMs. I also try to blog about the papers I read.

Please feel free to reach out to discuss or collaborate: yihedeng at g dot ucla dot edu 😃

*Equal Contribution